Trust & Safety has quietly become one of the most critical - and complex - challenges facing online platforms today. As communities scale user-generated content globally, in real time, the systems that protect users, creators, and platforms themselves can no longer be reactive or invisible.

That’s why we’re excited to welcome Sharon Fisher as an Advisor at Checkstep. With more than a decade of experience spanning Disney, Microsoft, Riot, Roblox, Nintendo, Scopely, and Keywords Studios, Sharon has worked at the intersection of community, moderation, and technology - helping some of the world’s largest platforms and gaming companies operationalize trust at scale.

In this conversation, Sharon shares her perspective on how Trust & Safety has evolved, why it’s still misunderstood, and what it will take for the industry to move from reactive moderation to proactive safety design.

1. You’ve spent over a decade working at the intersection of gaming, community, and Trust & Safety. What’s the biggest shift you’ve seen in how the industry thinks about T&S today?

The biggest shift is that Trust & Safety is no longer invisible. Ten years ago, moderation and safety were mostly reactive and buried deep in operations. Today, they’re increasingly recognized as core to product health, brand reputation, and long-term growth.

That said, we’re still mid-transition. Many companies intellectually understand the importance of Trust & Safety, but structurally it’s often underpowered, under-resourced, or introduced too late, sometimes folded into other roles. In practice, the maturity gap is most often driven by financial prioritization, limited understanding of available technology, and internal communication gaps between product, operations, and leadership.

2. Gaming communities are uniquely global, emotional, and fast-moving. What makes Trust & Safety in gaming fundamentally different from other digital spaces?

Games are not passive platforms; they are persistent, real-time environments. Players are competing, cooperating, forming online relationships, and investing time, money, and identity. That intensity amplifies both positive experiences and potential harm.

Unlike on social media, violations in games often happen live, across voice, text, and gameplay mechanics simultaneously. Moderation decisions can directly affect outcomes, social dynamics, and player experience in the moment. Add global audiences, cultural nuance, and constantly evolving slang, and you’re operating in one of the most complex real-time digital ecosystems.

3. Trust & Safety is still often treated as a cost center. From your experience, how does strong community and moderation strategy actually drive engagement, loyalty, and revenue?

This is the billion-dollar question that has challenged the Trust & Safety industry for years: how do we demonstrate ROI?

What has become clear is that the shift happens when Trust & Safety is measured by business outcomes, not activity metrics or volume alone. Moving from “doing good” to demonstrating business impact is essential.

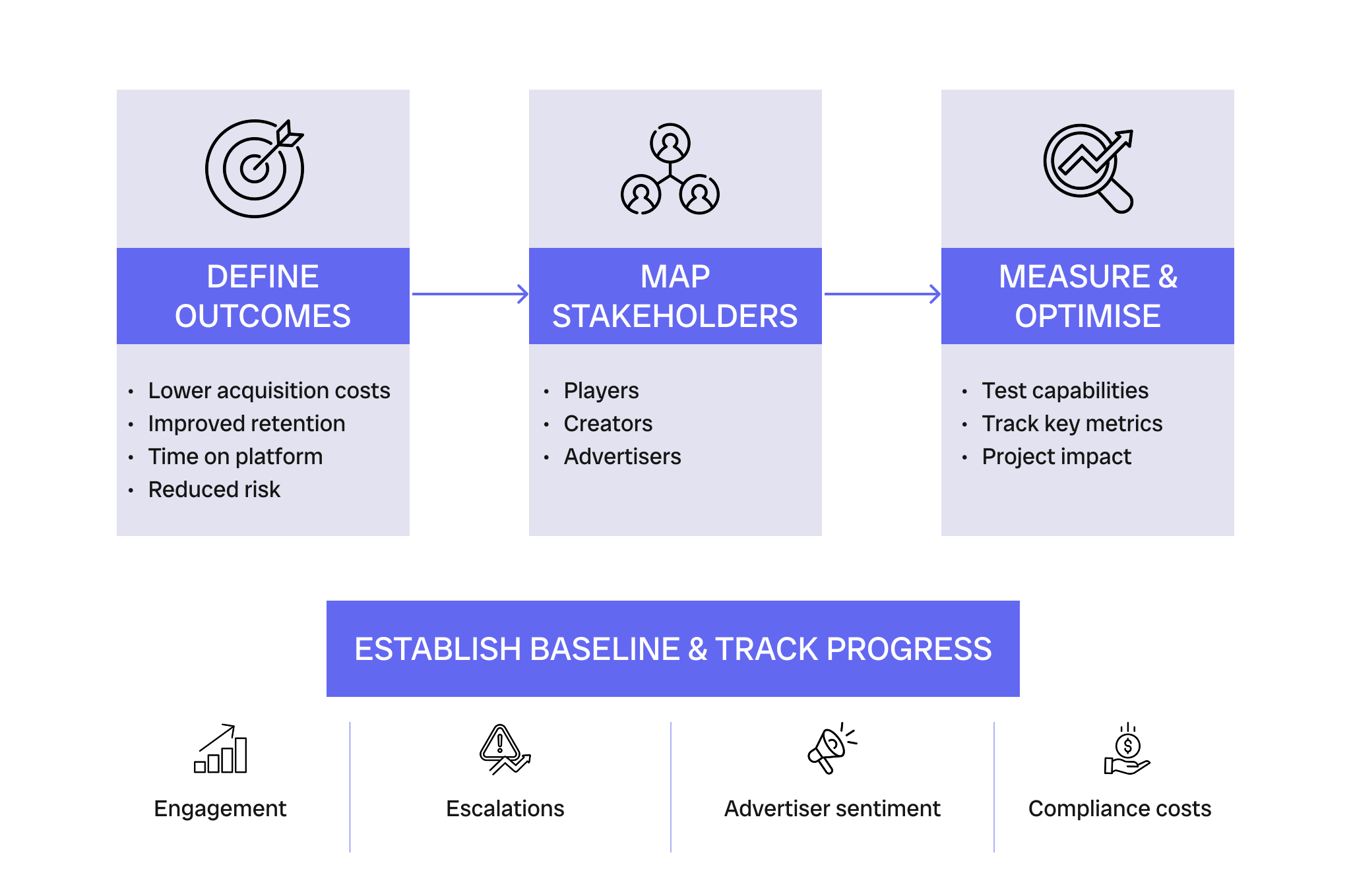

I typically recommend a structured framework that brings multiple stakeholders together, aligns on shared KPIs, and creates a unified understanding of Trust & Safety’s impact across the organization.

Step 1

Teams should start by defining outcomes leadership already cares about, such as lower customer acquisition costs, improved retention, increased time spent on platform, and reduced regulatory risk. Trust & Safety may not own these outcomes outright, but it directly influences the behaviors that drive them and can meaningfully contribute to these metrics.

Step 2

Organizations need to map the stakeholder groups affected by safety decisions. Players engage longer when they feel safe to be themselves and are treated fairly. Creators invest more when their work isn’t undermined by harassment. Advertisers are more willing to spend when brand risk is well managed. Moderation and community strategy directly shape these behaviors.

Step 3

Once behaviors are clear, teams can evaluate which Trust & Safety capabilities are actually moving the needle. This might include clearer policies that improve consistency, better tooling that reduces false positives, or feedback mechanisms that increase user trust in enforcement. The key is testing specific capabilities against specific outcomes, rather than treating Trust & Safety as an isolated function.

Establishing a baseline is critical. Before making new investments, teams need a clear view of current engagement, churn, escalation rates, advertiser sentiment, and compliance costs. Without this baseline, Trust & Safety will continue to look like a cost, simply because there is nothing to measure progress against. Some of the most important safety outcomes, such as escalations involving child safety, are deeply impactful but rarely visible in standard performance dashboards.

From there, organizations can project benefits using historical data, user research, and industry benchmarks. Even small improvements in perceived fairness or response quality can materially change user behavior, increasing session length, reducing churn, and stabilizing communities.

There are concrete examples of this in practice:

- Microsoft’s Xbox enforcement strike system has shown that when player reports resulted in an enforcement strike, 98% of players demonstrated improved behavior and did not receive subsequent enforcement actions.

- Riot Games’ behavioral systems updates have shown measurable, player-reported improvements in perceptions that behavior systems protect the game experience and discourage negative conduct.

When baseline data is combined with projected behavior shifts, Trust & Safety becomes quantifiable. Teams can estimate returns, set targets, and have credible conversations with finance and leadership about why these investments matter. At that point, Trust & Safety moves from being a defensive function to a growth enabler, creating the conditions for healthy engagement, loyalty, and revenue to scale.
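As an illustration only, the arithmetic behind "baseline plus projected behavior shift" can be sketched in a few lines of Python. Everything here is an assumption made for the example: the `Baseline` fields, the function names, and all of the numbers are hypothetical, and churn is used as the single behavior being shifted. It is a minimal sketch, not a model from the interview or from any Checkstep tooling.

```python
from dataclasses import dataclass


@dataclass
class Baseline:
    """Hypothetical baseline figures; replace with your own measured data."""
    monthly_active_users: int
    monthly_churn_rate: float   # fraction of users lost per month
    revenue_per_user: float     # average monthly revenue per active user


def retained_users(baseline: Baseline, projected_churn_rate: float) -> float:
    """Extra users kept per month if churn falls to the projected rate."""
    churn_delta = baseline.monthly_churn_rate - projected_churn_rate
    return baseline.monthly_active_users * churn_delta


def estimated_roi(baseline: Baseline, projected_churn_rate: float,
                  monthly_investment: float) -> float:
    """Simple ROI: (retained revenue - investment) / investment."""
    gain = retained_users(baseline, projected_churn_rate) * baseline.revenue_per_user
    return (gain - monthly_investment) / monthly_investment


# Illustrative numbers only (assumptions, not real data).
baseline = Baseline(monthly_active_users=100_000,
                    monthly_churn_rate=0.05,
                    revenue_per_user=4.0)
roi = estimated_roi(baseline, projected_churn_rate=0.045, monthly_investment=1_500)
print(f"Estimated monthly ROI: {roi:.0%}")
```

A real estimate would fold in the other baseline measures mentioned above (escalation rates, advertiser sentiment, compliance costs) and would treat the projected churn rate as a range rather than a single value.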

4. You’ve helped companies reduce human and operational costs while scaling community efforts. What separates organizations that do T&S well from those that struggle?

The strongest organizations design Trust & Safety as a system, not just a set of workflows.

They align policy, tooling, data, and people from the start. They invest in moderator feedback loops, realistic success metrics, and tooling that supports human judgment rather than working against it. Trust & Safety is embedded cross-functionally rather than operating in isolation.

Organizations that struggle are often structurally siloed, pursue automation without a solid foundation, or outsource critical decision-making without maintaining internal ownership. Effective Trust & Safety programs are intentional, iterative, and collaborative across teams.

5. What’s a common misconception about moderation or community management that leaders need to rethink right now?

A common misconception is that moderation is a low-complexity, low-ROI, interchangeable function.

In reality, moderation is high-stakes decision-making that directly affects user trust, brand risk, regulatory exposure, and platform stability. Moderators make judgment calls under time pressure, across cultural and linguistic contexts, often with incomplete information and insufficiently aligned tools.

When moderation is treated as low-skill or easily replaceable, organizations see predictable outcomes: inconsistent enforcement, higher error rates, increased appeals and escalations, elevated regulatory risk, and significant operational churn. These costs rarely appear as line items in Trust & Safety budgets, but they surface downstream in brand damage, user attrition, and compliance challenges.

Leaders need to recognize moderation as a core safety operation, not a clean-up function. Investing in the right people, training, tooling, and decision frameworks is not only about protecting moderators; it’s about protecting the business’s bottom line and reputation.

6. As platforms scale, tooling, policy, and people all have to evolve together. Where do you see the biggest opportunities for innovation in Trust & Safety over the next few years?

The biggest opportunity lies in hybrid systems that combine AI with human expertise more effectively.

We need better signal quality, greater transparency into automated decisions, and tooling designed with moderation practitioners, not just engineers, in mind. There is also significant opportunity in prevention and early intervention, such as in-product parental education, friction design, and clearer behavioral expectations, not just enforcement after harm occurs.

The future of Trust & Safety is less about volume and more about prevention, data visibility, and precision.

7. What drew you to work with Checkstep, and where do you think we can make the most meaningful impact together?

At the beginning of my career, I worked on early moderation efforts at Club Penguin at Disney, where I learned firsthand how safety, community trust, and product development are deeply interconnected. I then joined Two Hat, later Community Sift at Microsoft, where I worked on moderation technology for gaming consoles, social platforms, and online communities and saw up close the challenges they face between operations and technology.

When Keywords Studios asked me to build their Trust & Safety department, I had the opportunity to bring together moderators, corporations, and technology providers into a more cohesive operating model.

Over the past year, I’ve transitioned into independent consulting, with Scopely and the Monopoly GO! chat team as early clients, where I once again focused on connecting human moderation with filtering and AI-driven technology.

Taken together, these experiences shaped a clear point of view: the next phase of Trust & Safety will be won by platforms that can operationalize trust at scale, through systems where policy, people, and technology are designed to work together.

That perspective has guided how I choose where to focus my work today. What drew me to Checkstep is the clarity of purpose and the willingness to engage with complexity rather than oversimplify it. The greatest opportunity lies in helping platforms move from reactive moderation to proactive safety design, where AI and human moderators work together effectively. This includes improving policy clarity, supporting moderators with better tools and training, and helping companies understand how safety decisions affect real users at scale.

Checkstep is uniquely positioned to bridge the gap between trust, technology, and human judgment.

8. If there’s one mindset shift you’d like leaders in T&S to adopt in 2026, what would it be - and why?

If there’s one mindset shift I’d like leaders in Trust & Safety to adopt in 2026, it’s this: stop viewing safety as a cost to manage and start designing it as a system that enables scale, growth, and margin.

Leaders today are under real pressure to do more with less, to grow faster without increasing headcount, and to protect margins in increasingly competitive markets. Trust & Safety cannot sit outside of those realities; it has to be part of the solution.

AI will play a critical role in that future, but its complexity, integration, governance, and ongoing maintenance are still very much “up in the air” for many organizations. Most teams are not yet equipped to navigate that complexity on their own, especially when safety, regulation, and user trust are on the line.

The opportunity lies in building systems where AI and human expertise are intentionally designed to work together, reducing operational cost while increasing precision, consistency, and trust. That’s where Trust & Safety becomes a strategic advantage rather than a constraint.

For leaders looking to scale responsibly in this next phase, the conversation needs to shift from whether to invest in Trust & Safety to how to do it in a way that supports sustainable growth. That’s exactly where Checkstep can help, by partnering with platforms to operationalize trust at scale while navigating the complexity of modern safety challenges.

Welcoming Sharon Fisher to Checkstep

As online platforms face increasing pressure to scale responsibly, protect margins, and navigate regulatory complexity, Sharon’s perspective underscores a critical truth: Trust & Safety is no longer optional, and it cannot sit on the sidelines of growth conversations. When policy, people, and technology are intentionally designed to work together, safety becomes a strategic advantage rather than a constraint.

Over the next six months, Sharon will be working closely with Checkstep to help platforms move beyond fragmented approaches toward systems that operationalize trust at scale - combining human judgment with AI in ways that are both effective and sustainable.

Sharon has an extensive background in gaming and social platforms, with deep experience building and scaling global safety programs across policy, operations, and technology. She is joining Checkstep as an advisor, supporting clients as they navigate platform risk, AI-powered moderation, and the growing regulatory and operational demands of online communities.

Previously, Sharon built Trust & Safety operations from the ground up at Keywords Studios, where she established and scaled a global team of approximately 250 Trust & Safety professionals across multiple regions. Her work focused on operational rigor, quality frameworks, moderator wellbeing, and aligning safety outcomes with business objectives at scale. Following Keywords Studios, Sharon launched her own advisory practice in response to industry demand for senior Trust & Safety leadership. During this period, Scopely engaged her to help formalize and prepare Trust & Safety operations for the global launch and scale of Monopoly GO! chat, one of the world’s most anticipated and widely used social features in mobile gaming.

Earlier in her career, Sharon worked on early online moderation initiatives at The Walt Disney Company and later on moderation technology and strategic partnerships at Two Hat, collaborating with platforms and game studios including Supercell, Minecraft, Twitch, and Amazon Games through trust-based, transparent partnerships. Sharon joins Checkstep to bring that work full circle and help past Two Hat customers discover the new era of proactive, AI-driven, human-informed moderation.

Build safer online platforms by design

If you’re a Trust & Safety or community leader and want to explore how scalable moderation can support community management, talk to us. We help online platforms design safety systems across text, voice, video, live chat, and UGC - at scale.