Checkstep’s technology team worked closely with customers to develop new capabilities, including features that make it easier to adapt chat models, new tools to fight novel child sexual abuse material, and new tools to assess the quality of human moderators and automation models. With Checkstep, it’s easier than ever to manage end-to-end content analysis, moderation, transparency, and compliance.

The latest capabilities in AI scanning

New detection capabilities for novel Child Sexual Abuse Material (CSAM)

In partnership with Resolver, Checkstep integrated a brand-new detection service that specializes in novel (previously unknown) CSAM. This solution, trained on the UK Government’s Child Abuse Image Database (CAID), allows Checkstep customers to uncover new CSAM on their platform alongside the hash-matching services provided by the Canadian Centre for Child Protection’s Project Arachnid, which detect known CSAM. The fight against child abuse material is critical for any platform hosting image or video content, and Checkstep’s integration of Resolver’s Athena model gives customers more coverage than ever before.

Extended scanning to include new file formats like PDFs

With large language models (LLMs) like GPT-4.1 mini, it’s possible to scan PDFs alongside text and image content to ensure everything posted on your platform adheres to your content policy guidelines. You can now send PDFs as part of any content scanned by Checkstep and have them scored and analyzed by your moderation models.

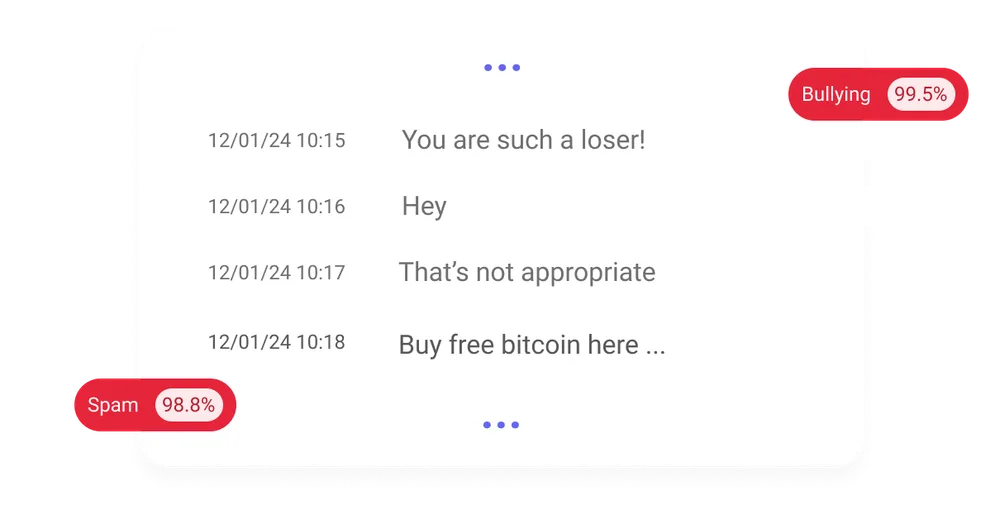

Chat scanning that reviews messages individually and contextually

Checkstep enhanced its low-latency (sub-100ms) chat models to support contextual and non-contextual analysis of chat messages in parallel, producing more accurate decisions about harm in chat feeds. Customers can now detect contextual harm more accurately than before while keeping real-time speed.
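To make the idea of parallel analysis concrete, here is a minimal Python sketch. It is not Checkstep’s implementation or API: the two scoring functions, their keyword rules, and the five-message window are hypothetical stand-ins for real moderation models. It only shows the general pattern of scoring a message on its own and in the context of recent history at the same time, then keeping the higher score.

```python
import asyncio
from collections import deque


# Hypothetical stand-ins for real moderation models (illustrative keyword rules only).
async def score_message(message: str) -> float:
    """Non-contextual check: looks at the single message in isolation."""
    return 1.0 if "idiot" in message.lower() else 0.0


async def score_in_context(history: list[str], message: str) -> float:
    """Contextual check: looks at the message together with recent history."""
    window = " ".join(history + [message]).lower()
    return 1.0 if "where do you live" in window and "alone" in window else 0.0


async def scan(history: deque, message: str) -> float:
    # Run both checks concurrently so the slower one sets the latency,
    # rather than the sum of the two.
    individual, contextual = await asyncio.gather(
        score_message(message),
        score_in_context(list(history), message),
    )
    history.append(message)
    # Assumption: the final harm score is the higher of the two signals.
    return max(individual, contextual)


def scan_conversation(messages: list[str], window: int = 5) -> list[float]:
    """Score each message in order, keeping a rolling window of prior messages."""
    history: deque = deque(maxlen=window)

    async def run() -> list[float]:
        return [await scan(history, m) for m in messages]

    return asyncio.run(run())
```

In this toy example, a message such as "are you home alone?" scores zero on its own but is flagged once the earlier "where do you live?" is in the window, which is the kind of harm a per-message check alone would miss.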

Faster human moderation decisions, supported by AI

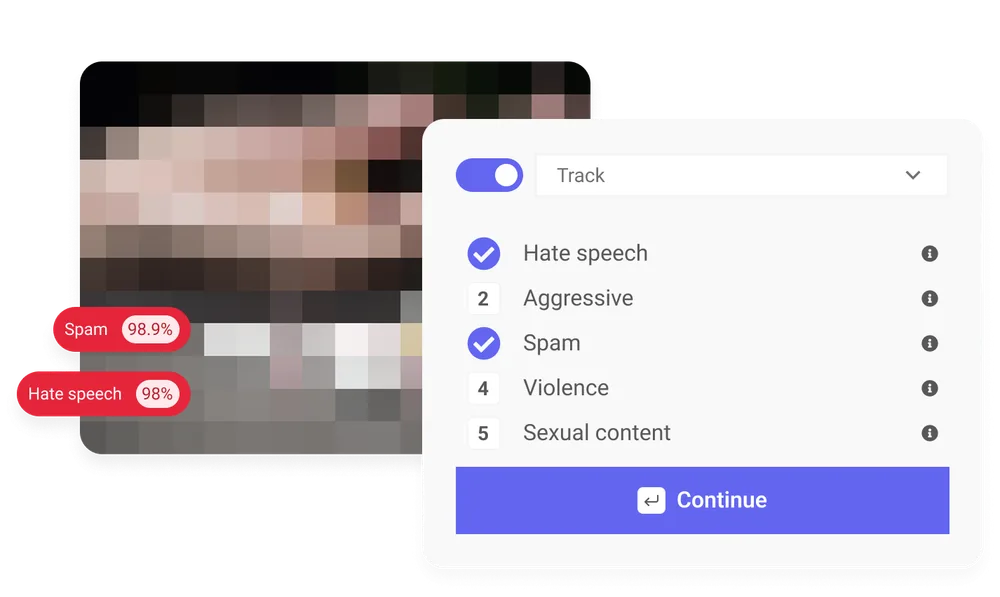

Streamlined Moderator Experiences

Checkstep launched a new moderator experience in September that makes it easier to view complex multimedia content, to highlight what was flagged for review, and to view community reports and customer appeals. Combined with existing keyboard shortcuts for decision-making, the moderation UI cuts moderation time by up to 83%, boosting productivity across Trust and Safety teams.

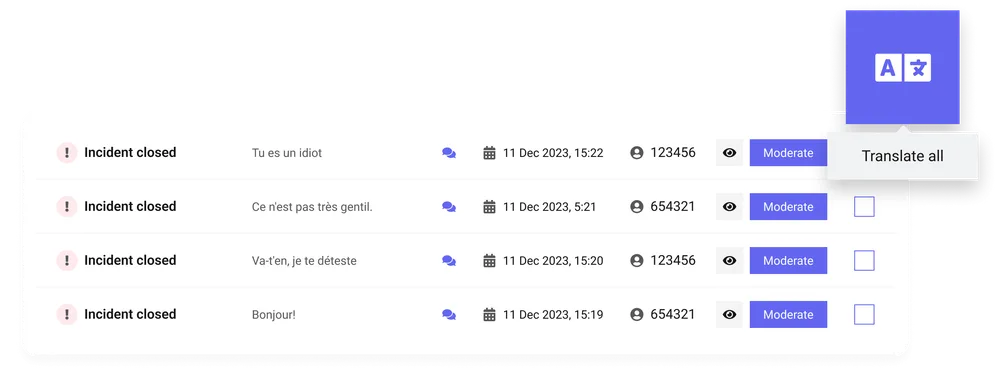

Enhanced Translation Features

In November, Checkstep improved its bulk translation features so that customers can translate foreign-language content more easily, particularly when reviewing content decisions and digging for insights. While Checkstep has long supported easy translation within the UI, teams can now translate whole chat conversations at once.

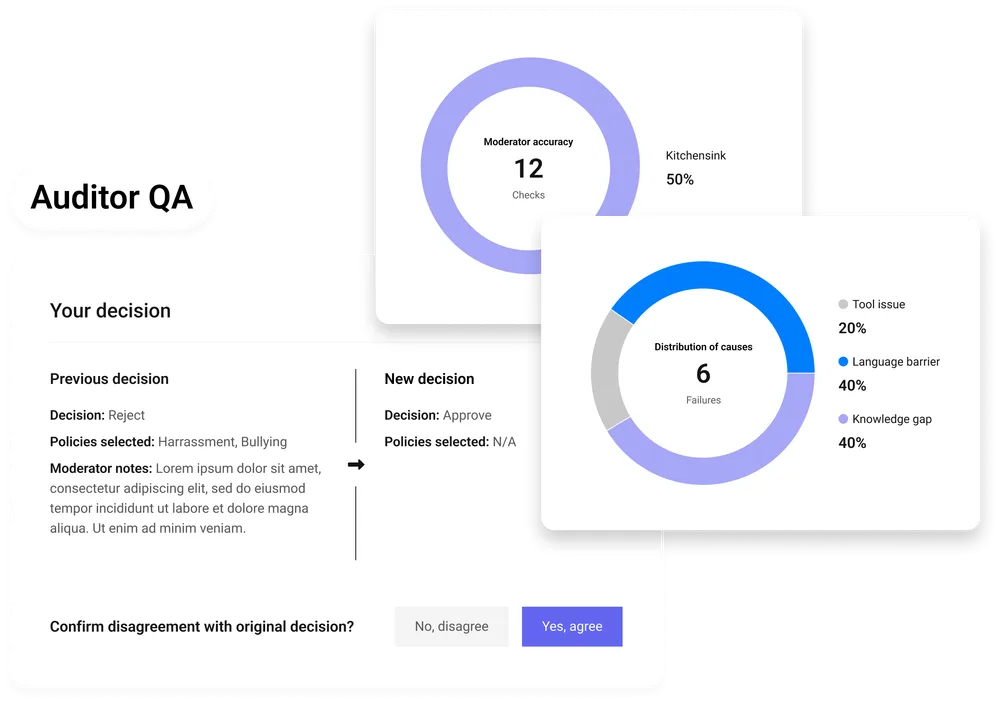

Deeper insights and capabilities to assess and improve quality

Tiered Quality Assessment

Checkstep launched new features that allow teams to run multiple levels of quality checks, giving large moderator groups the ability to assess and coach moderators as well as the quality assessors themselves. This also allows teams to create double-verified golden datasets for testing and assessing any of their automated workflows.
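As a rough illustration of how a double-verified golden dataset could be derived from tiered reviews, consider the Python sketch below. The `Review` record, its field names, and the rule that a label counts as "golden" only when both quality tiers agree are assumptions made for this example, not Checkstep’s data model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Review:
    """Hypothetical record of one piece of content and its tiered decisions."""
    content_id: str
    moderator: str   # original moderator decision
    qa: str          # first-tier quality assessor's decision
    qa_review: str   # second-tier check of the assessor's decision


def golden_dataset(reviews: list[Review]) -> dict[str, str]:
    """Keep only labels confirmed by both quality tiers (assumed definition)."""
    return {r.content_id: r.qa for r in reviews if r.qa == r.qa_review}


def moderator_accuracy(reviews: list[Review]) -> Optional[float]:
    """Share of moderator decisions that match the golden label."""
    golden = golden_dataset(reviews)
    graded = [r for r in reviews if r.content_id in golden]
    if not graded:
        return None  # nothing double-verified yet, so no score
    matches = sum(r.moderator == golden[r.content_id] for r in graded)
    return matches / len(graded)
```

The same golden labels could be compared against an automated workflow’s decisions instead of a moderator’s, which is how such a dataset would serve for testing automation.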

Easier Data Exports

Checkstep supports more detailed exports of moderation decisions and case data out of the Checkstep platform. Customers can visualize trends by theme and keywords in seconds and extract key information to drive business and policy decisions for their moderation system.

Book a demo to explore our range of features and discover how Checkstep can help you deliver better trust & safety operations for your platform.